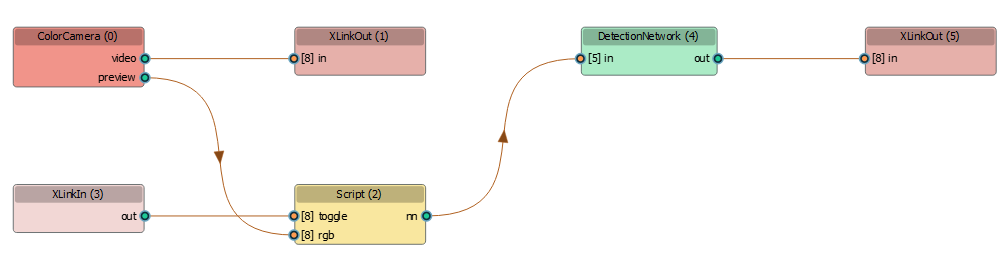

Script change pipeline flow

This example shows how you can use the Script node to change the flow of data inside your pipeline at runtime. The host sends a message that tells the Script node whether or not to forward color frames to the MobileNetDetectionNetwork.
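The toggle logic can be tried out without a device. The following is a minimal host-only simulation, not DepthAI code: Python's `queue.Queue` stands in for the Script node's `node.io` inputs and outputs, and the names `toggle_in`, `rgb_in`, `nn_out`, `try_get` and `script_step` are hypothetical. It mirrors the Script node loop: poll for a toggle message without blocking, always consume the next frame, and forward it only while the toggle is set.

```python
import queue

# Hypothetical stand-ins for the Script node's I/O; on the device these would be
# node.io['toggle'], node.io['rgb'] and node.io['nn'].
toggle_in = queue.Queue()
rgb_in = queue.Queue()
nn_out = queue.Queue()


def try_get(q):
    """Non-blocking read, mimicking tryGet(): returns None when nothing is queued."""
    try:
        return q.get_nowait()
    except queue.Empty:
        return None


def script_step(state):
    """One iteration of the Script node loop; `state` holds the current toggle."""
    msg = try_get(toggle_in)
    if msg is not None:
        # The host sends a single byte: 1 = forward frames, 0 = drop them
        state["toggle"] = msg[0]
    frame = rgb_in.get()  # blocking read, like node.io['rgb'].get()
    if state["toggle"]:
        nn_out.put(frame)  # like node.io['nn'].send(frame)


state = {"toggle": 0}
for i in range(3):
    rgb_in.put(f"frame{i}")
    if i == 1:
        toggle_in.put(bytes([1]))  # host enables inference before frame1 is processed
    script_step(state)

forwarded = [nn_out.get_nowait() for _ in range(nn_out.qsize())]
print(forwarded)  # ['frame1', 'frame2']
```

Because a frame is read on every iteration whether or not it is forwarded, the preview input keeps draining while inference is off; only the `send` is gated by the toggle.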

Demo

Pipeline Graph

Setup

Please run the install script to download all required dependencies. Note that this script must be run from within a git checkout, so you first have to clone the depthai-python repository and then run the script:

git clone https://github.com/luxonis/depthai-python.git

cd depthai-python/examples

python3 install_requirements.py

For additional information, please follow the installation guide.

Source code

Python (also available on GitHub)

#!/usr/bin/env python3

import depthai as dai
import cv2
from pathlib import Path
import numpy as np

parentDir = Path(__file__).parent
nnPath = str((parentDir / Path('../models/mobilenet-ssd_openvino_2021.4_5shave.blob')).resolve().absolute())

pipeline = dai.Pipeline()

# Color camera: 720x720 video stream for display, 300x300 preview for the NN
cam = pipeline.create(dai.node.ColorCamera)
cam.setBoardSocket(dai.CameraBoardSocket.CAM_A)
cam.setInterleaved(False)
cam.setIspScale(2,3)
cam.setVideoSize(720,720)
cam.setPreviewSize(300,300)

xoutRgb = pipeline.create(dai.node.XLinkOut)
xoutRgb.setStreamName('rgb')
cam.video.link(xoutRgb.input)

script = pipeline.create(dai.node.Script)

# Toggle messages from the host arrive on the Script node's 'toggle' input
xin = pipeline.create(dai.node.XLinkIn)
xin.setStreamName('in')
xin.out.link(script.inputs['toggle'])

cam.preview.link(script.inputs['rgb'])

# Runs on the device: forward preview frames to the NN only while the toggle is on
script.setScript("""
toggle = False
while True:
    msg = node.io['toggle'].tryGet()
    if msg is not None:
        toggle = msg.getData()[0]
        node.warn('Toggle! Perform NN inferencing: ' + str(toggle))

    frame = node.io['rgb'].get()

    if toggle:
        node.io['nn'].send(frame)
""")

nn = pipeline.create(dai.node.MobileNetDetectionNetwork)
nn.setBlobPath(nnPath)
script.outputs['nn'].link(nn.input)

xoutNn = pipeline.create(dai.node.XLinkOut)
xoutNn.setStreamName('nn')
nn.out.link(xoutNn.input)

# Connect to device with pipeline
with dai.Device(pipeline) as device:
    inQ = device.getInputQueue("in")
    qRgb = device.getOutputQueue("rgb")
    qNn = device.getOutputQueue("nn")

    runNn = False

    # Map normalized (0..1) bbox coordinates to pixel coordinates of the frame
    def frameNorm(frame, bbox):
        normVals = np.full(len(bbox), frame.shape[0])
        normVals[::2] = frame.shape[1]
        return (np.clip(np.array(bbox), 0, 1) * normVals).astype(int)

    color = (255, 127, 0)

    def drawDetections(frame, detections):
        for detection in detections:
            bbox = frameNorm(frame, (detection.xmin, detection.ymin, detection.xmax, detection.ymax))
            cv2.putText(frame, f"{int(detection.confidence * 100)}%", (bbox[0] + 10, bbox[1] + 20), cv2.FONT_HERSHEY_TRIPLEX, 0.5, color)
            cv2.rectangle(frame, (bbox[0], bbox[1]), (bbox[2], bbox[3]), color, 2)

    while True:
        frame = qRgb.get().getCvFrame()
        if qNn.has():
            detections = qNn.get().detections
            drawDetections(frame, detections)

        cv2.putText(frame, f"NN inferencing: {runNn}", (20,20), cv2.FONT_HERSHEY_TRIPLEX, 0.7, color)
        cv2.imshow('Color frame', frame)

        key = cv2.waitKey(1)
        if key == ord('q'):
            break
        elif key == ord('t'):
            runNn = not runNn
            print(f"{'Enabling' if runNn else 'Disabling'} NN inferencing")
            # Send the new state as a one-byte payload: 1 = run NN, 0 = skip
            buf = dai.Buffer()
            buf.setData([int(runNn)])
            inQ.send(buf)
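`frameNorm` turns a detection's normalized coordinates (0 to 1) into pixel coordinates on the displayed frame: even indices (x values) are scaled by the frame width, odd indices (y values) by the height, and out-of-range values are clipped to the frame edge first. Below, the same helper is run standalone on a hypothetical 600x300 frame; the example itself displays a square 720x720 frame, so a non-square one is used here only to make the width and height scaling distinguishable.

```python
import numpy as np


def frameNorm(frame, bbox):
    # Same helper as in the example: bbox is (xmin, ymin, xmax, ymax) in [0, 1]
    normVals = np.full(len(bbox), frame.shape[0])  # start with the height everywhere
    normVals[::2] = frame.shape[1]                 # x coordinates (even indices) use the width
    return (np.clip(np.array(bbox), 0, 1) * normVals).astype(int)


frame = np.zeros((300, 600, 3), dtype=np.uint8)  # hypothetical frame: height 300, width 600
print(frameNorm(frame, (0.25, 0.5, 0.75, 1.5)))  # [150 150 450 300]
```

The `ymax` of 1.5 is clipped to 1.0, so the box ends at the bottom edge (row 300) instead of outside the image.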

C++ (also available on GitHub)

#include <cstdint>
#include <iomanip>
#include <iostream>
#include <sstream>
#include <string>
#include <vector>

// Includes common necessary includes for development using depthai library
#include "depthai/depthai.hpp"

int main() {
    dai::Pipeline pipeline;

    // Color camera: 720x720 video stream for display, 300x300 preview for the NN
    auto cam = pipeline.create<dai::node::ColorCamera>();
    cam->setBoardSocket(dai::CameraBoardSocket::CAM_A);
    cam->setInterleaved(false);
    cam->setIspScale(2, 3);
    cam->setVideoSize(720, 720);
    cam->setPreviewSize(300, 300);

    auto xoutRgb = pipeline.create<dai::node::XLinkOut>();
    xoutRgb->setStreamName("rgb");
    cam->video.link(xoutRgb->input);

    auto script = pipeline.create<dai::node::Script>();

    // Toggle messages from the host arrive on the Script node's 'toggle' input
    auto xin = pipeline.create<dai::node::XLinkIn>();
    xin->setStreamName("in");
    xin->out.link(script->inputs["toggle"]);

    cam->preview.link(script->inputs["rgb"]);

    // Runs on the device: forward preview frames to the NN only while the toggle is on
    script->setScript(R"(
toggle = False
while True:
    msg = node.io['toggle'].tryGet()
    if msg is not None:
        toggle = msg.getData()[0]
        node.warn('Toggle! Perform NN inferencing: ' + str(toggle))

    frame = node.io['rgb'].get()

    if toggle:
        node.io['nn'].send(frame)
)");

    auto nn = pipeline.create<dai::node::MobileNetDetectionNetwork>();
    // BLOB_PATH is expected to be supplied as a compile-time definition by the build
    nn->setBlobPath(BLOB_PATH);
    script->outputs["nn"].link(nn->input);

    auto xoutNn = pipeline.create<dai::node::XLinkOut>();
    xoutNn->setStreamName("nn");
    nn->out.link(xoutNn->input);

    // Connect to device with pipeline
    dai::Device device(pipeline);
    auto inQ = device.getInputQueue("in");
    auto qRgb = device.getOutputQueue("rgb");
    auto qNn = device.getOutputQueue("nn");

    bool runNn = false;
    auto color = cv::Scalar(255, 127, 0);

    // Scale normalized (0..1) detection coordinates to the frame and draw them
    auto drawDetections = [color](cv::Mat frame, std::vector<dai::ImgDetection>& detections) {
        for(auto& detection : detections) {
            int x1 = detection.xmin * frame.cols;
            int y1 = detection.ymin * frame.rows;
            int x2 = detection.xmax * frame.cols;
            int y2 = detection.ymax * frame.rows;

            std::stringstream confStr;
            confStr << std::fixed << std::setprecision(2) << detection.confidence * 100;
            cv::putText(frame, confStr.str(), cv::Point(x1 + 10, y1 + 20), cv::FONT_HERSHEY_TRIPLEX, 0.5, color);
            cv::rectangle(frame, cv::Rect(cv::Point(x1, y1), cv::Point(x2, y2)), color, 2);
        }
    };

    while(true) {
        auto frame = qRgb->get<dai::ImgFrame>()->getCvFrame();
        auto imgDetections = qNn->tryGet<dai::ImgDetections>();
        if(imgDetections != nullptr) {
            auto detections = imgDetections->detections;
            drawDetections(frame, detections);
        }

        std::string frameText = "NN inferencing: ";
        if(runNn) {
            frameText += "On";
        } else {
            frameText += "Off";
        }
        cv::putText(frame, frameText, cv::Point(20, 20), cv::FONT_HERSHEY_TRIPLEX, 0.7, color);
        cv::imshow("Color frame", frame);

        int key = cv::waitKey(1);
        if(key == 'q') {
            return 0;
        } else if(key == 't') {
            if(runNn) {
                std::cout << "Disabling NN inferencing\n";
            } else {
                std::cout << "Enabling NN inferencing\n";
            }
            runNn = !runNn;

            // Send the new state as a one-byte payload: 1 = run NN, 0 = skip
            auto buf = dai::Buffer();
            std::vector<std::uint8_t> messageData;
            messageData.push_back(runNn);
            buf.setData(messageData);
            inQ->send(buf);
        }
    }
}
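On the host side, both versions keep a `runNn` flag, flip it on every `t` key press and send the new value as a single byte, which the Script node reads back with `msg.getData()[0]`. The sketch below models only that key-to-payload step in plain Python; `toggle_messages`, `script_decode` and the key sequence are hypothetical and no DepthAI calls are involved.

```python
def toggle_messages(keys):
    """Yield the one-byte payload the host would send for each 't' key press.

    Mirrors the host loops above: runNn starts as False, 'q' ends the loop,
    and every 't' flips the flag before the new value is sent.
    """
    run_nn = False
    for key in keys:
        if key == "q":
            break
        if key == "t":
            run_nn = not run_nn
            yield bytes([run_nn])  # True -> b'\x01', False -> b'\x00'


def script_decode(payload):
    """What the Script node reads: the first byte as an integer, like msg.getData()[0]."""
    return payload[0]


payloads = list(toggle_messages(["t", "x", "t", "t", "q", "t"]))
print([script_decode(p) for p in payloads])  # [1, 0, 1]
```

Since the Script node works with the raw byte value, its `node.warn` log is expected to show `1` or `0` rather than `True` or `False`.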