RGB Depth alignment¶

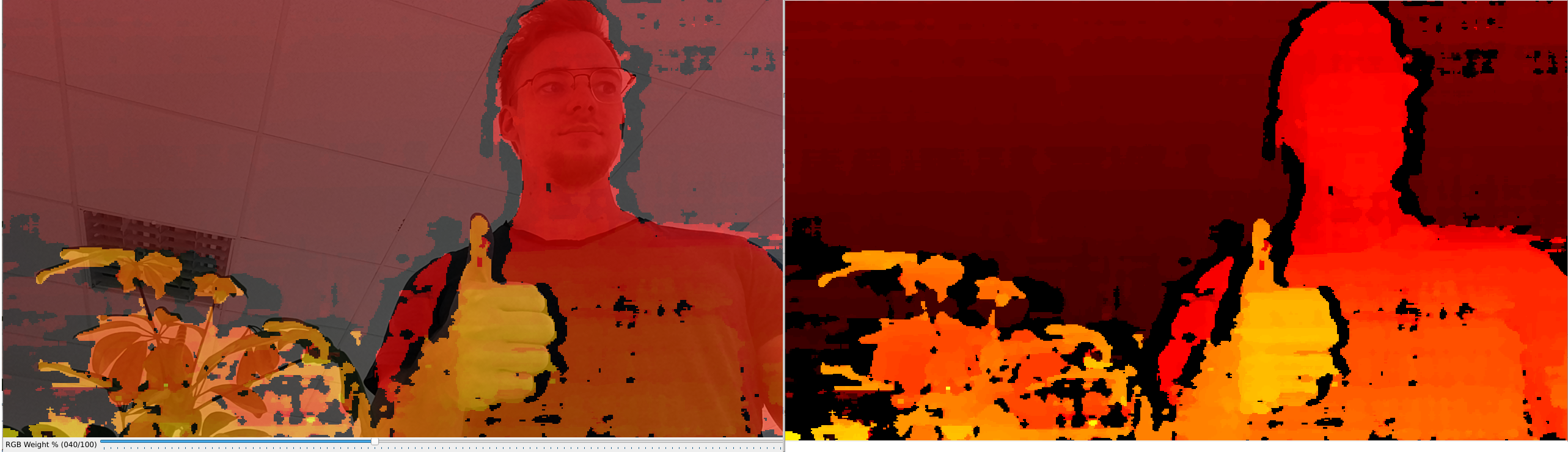

This example shows usage of RGB depth alignment. Since OAK-D has a color and a pair of stereo cameras, you can align depth map to the color frame on top of that to get RGB depth.

In this example, rgb and depth aren’t perfectly in sync. For that, you would need to add Software syncing, which has been added to the demo here, where RGB and depth frames have sub-ms delay.

By default, the depth map will get scaled to match the resolution of the camera sensor we want to align to. In other words, if

depth is aligned to the 1080P color sensor, StereoDepth will upscale depth to 1080P as well.

Depth scaling can be avoided by configuring StereoDepth’s stereo.setOutputSize(width, height).

To align depth with higher resolution color stream (eg. 12MP), you need to limit the resolution of the depth map. You can

do that with stereo.setOutputSize(w,h). Code example here.

Host alignment¶

StereoDepth node aligns depth map to selected sensor (in this case, color sensor), on the OAK device itself. One can also do the same on the host side. We have developed a simple demo script here.

Demo¶

Setup¶

Please run the install script to download all required dependencies. Please note that this script must be ran from git context, so you have to download the depthai-python repository first and then run the script

git clone https://github.com/luxonis/depthai-python.git

cd depthai-python/examples

python3 install_requirements.py

For additional information, please follow installation guide

Source code¶

Also available on GitHub

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 | #!/usr/bin/env python3

import cv2

import numpy as np

import depthai as dai

import argparse

# Weights to use when blending depth/rgb image (should equal 1.0)

rgbWeight = 0.4

depthWeight = 0.6

parser = argparse.ArgumentParser()

parser.add_argument('-alpha', type=float, default=None, help="Alpha scaling parameter to increase float. [0,1] valid interval.")

args = parser.parse_args()

alpha = args.alpha

def updateBlendWeights(percent_rgb):

"""

Update the rgb and depth weights used to blend depth/rgb image

@param[in] percent_rgb The rgb weight expressed as a percentage (0..100)

"""

global depthWeight

global rgbWeight

rgbWeight = float(percent_rgb)/100.0

depthWeight = 1.0 - rgbWeight

fps = 30

# The disparity is computed at this resolution, then upscaled to RGB resolution

monoResolution = dai.MonoCameraProperties.SensorResolution.THE_720_P

# Create pipeline

pipeline = dai.Pipeline()

device = dai.Device()

queueNames = []

# Define sources and outputs

camRgb = pipeline.create(dai.node.Camera)

left = pipeline.create(dai.node.MonoCamera)

right = pipeline.create(dai.node.MonoCamera)

stereo = pipeline.create(dai.node.StereoDepth)

rgbOut = pipeline.create(dai.node.XLinkOut)

disparityOut = pipeline.create(dai.node.XLinkOut)

rgbOut.setStreamName("rgb")

queueNames.append("rgb")

disparityOut.setStreamName("disp")

queueNames.append("disp")

#Properties

rgbCamSocket = dai.CameraBoardSocket.CAM_A

camRgb.setBoardSocket(rgbCamSocket)

camRgb.setSize(1280, 720)

camRgb.setFps(fps)

# For now, RGB needs fixed focus to properly align with depth.

# This value was used during calibration

try:

calibData = device.readCalibration2()

lensPosition = calibData.getLensPosition(rgbCamSocket)

if lensPosition:

camRgb.initialControl.setManualFocus(lensPosition)

except:

raise

left.setResolution(monoResolution)

left.setCamera("left")

left.setFps(fps)

right.setResolution(monoResolution)

right.setCamera("right")

right.setFps(fps)

stereo.setDefaultProfilePreset(dai.node.StereoDepth.PresetMode.HIGH_DENSITY)

# LR-check is required for depth alignment

stereo.setLeftRightCheck(True)

stereo.setDepthAlign(rgbCamSocket)

# Linking

camRgb.video.link(rgbOut.input)

left.out.link(stereo.left)

right.out.link(stereo.right)

stereo.disparity.link(disparityOut.input)

camRgb.setMeshSource(dai.CameraProperties.WarpMeshSource.CALIBRATION)

if alpha is not None:

camRgb.setCalibrationAlpha(alpha)

stereo.setAlphaScaling(alpha)

# Connect to device and start pipeline

with device:

device.startPipeline(pipeline)

frameRgb = None

frameDisp = None

# Configure windows; trackbar adjusts blending ratio of rgb/depth

rgbWindowName = "rgb"

depthWindowName = "depth"

blendedWindowName = "rgb-depth"

cv2.namedWindow(rgbWindowName)

cv2.namedWindow(depthWindowName)

cv2.namedWindow(blendedWindowName)

cv2.createTrackbar('RGB Weight %', blendedWindowName, int(rgbWeight*100), 100, updateBlendWeights)

while True:

latestPacket = {}

latestPacket["rgb"] = None

latestPacket["disp"] = None

queueEvents = device.getQueueEvents(("rgb", "disp"))

for queueName in queueEvents:

packets = device.getOutputQueue(queueName).tryGetAll()

if len(packets) > 0:

latestPacket[queueName] = packets[-1]

if latestPacket["rgb"] is not None:

frameRgb = latestPacket["rgb"].getCvFrame()

cv2.imshow(rgbWindowName, frameRgb)

if latestPacket["disp"] is not None:

frameDisp = latestPacket["disp"].getFrame()

maxDisparity = stereo.initialConfig.getMaxDisparity()

# Optional, extend range 0..95 -> 0..255, for a better visualisation

if 1: frameDisp = (frameDisp * 255. / maxDisparity).astype(np.uint8)

# Optional, apply false colorization

if 1: frameDisp = cv2.applyColorMap(frameDisp, cv2.COLORMAP_HOT)

frameDisp = np.ascontiguousarray(frameDisp)

cv2.imshow(depthWindowName, frameDisp)

# Blend when both received

if frameRgb is not None and frameDisp is not None:

# Need to have both frames in BGR format before blending

if len(frameDisp.shape) < 3:

frameDisp = cv2.cvtColor(frameDisp, cv2.COLOR_GRAY2BGR)

blended = cv2.addWeighted(frameRgb, rgbWeight, frameDisp, depthWeight, 0)

cv2.imshow(blendedWindowName, blended)

frameRgb = None

frameDisp = None

if cv2.waitKey(1) == ord('q'):

break

|

Also available on GitHub

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 | #include <cstdio>

#include <iostream>

#include "utility.hpp"

// Includes common necessary includes for development using depthai library

#include "depthai/depthai.hpp"

// Optional. If set (true), the ColorCamera is downscaled from 1080p to 720p.

// Otherwise (false), the aligned depth is automatically upscaled to 1080p

static std::atomic<bool> downscaleColor{true};

static constexpr int fps = 30;

// The disparity is computed at this resolution, then upscaled to RGB resolution

static constexpr auto monoRes = dai::MonoCameraProperties::SensorResolution::THE_720_P;

static float rgbWeight = 0.4f;

static float depthWeight = 0.6f;

static void updateBlendWeights(int percentRgb, void* ctx) {

rgbWeight = float(percentRgb) / 100.f;

depthWeight = 1.f - rgbWeight;

}

int main() {

using namespace std;

// Create pipeline

dai::Pipeline pipeline;

dai::Device device;

std::vector<std::string> queueNames;

// Define sources and outputs

auto camRgb = pipeline.create<dai::node::ColorCamera>();

auto left = pipeline.create<dai::node::MonoCamera>();

auto right = pipeline.create<dai::node::MonoCamera>();

auto stereo = pipeline.create<dai::node::StereoDepth>();

auto rgbOut = pipeline.create<dai::node::XLinkOut>();

auto depthOut = pipeline.create<dai::node::XLinkOut>();

rgbOut->setStreamName("rgb");

queueNames.push_back("rgb");

depthOut->setStreamName("depth");

queueNames.push_back("depth");

// Properties

camRgb->setBoardSocket(dai::CameraBoardSocket::CAM_A);

camRgb->setResolution(dai::ColorCameraProperties::SensorResolution::THE_1080_P);

camRgb->setFps(fps);

if(downscaleColor) camRgb->setIspScale(2, 3);

// For now, RGB needs fixed focus to properly align with depth.

// This value was used during calibration

try {

auto calibData = device.readCalibration2();

auto lensPosition = calibData.getLensPosition(dai::CameraBoardSocket::CAM_A);

if(lensPosition) {

camRgb->initialControl.setManualFocus(lensPosition);

}

} catch(const std::exception& ex) {

std::cout << ex.what() << std::endl;

return 1;

}

left->setResolution(monoRes);

left->setCamera("left");

left->setFps(fps);

right->setResolution(monoRes);

right->setCamera("right");

right->setFps(fps);

stereo->setDefaultProfilePreset(dai::node::StereoDepth::PresetMode::HIGH_DENSITY);

// LR-check is required for depth alignment

stereo->setLeftRightCheck(true);

stereo->setDepthAlign(dai::CameraBoardSocket::CAM_A);

// Linking

camRgb->isp.link(rgbOut->input);

left->out.link(stereo->left);

right->out.link(stereo->right);

stereo->disparity.link(depthOut->input);

// Connect to device and start pipeline

device.startPipeline(pipeline);

// Sets queues size and behavior

for(const auto& name : queueNames) {

device.getOutputQueue(name, 4, false);

}

std::unordered_map<std::string, cv::Mat> frame;

auto rgbWindowName = "rgb";

auto depthWindowName = "depth";

auto blendedWindowName = "rgb-depth";

cv::namedWindow(rgbWindowName);

cv::namedWindow(depthWindowName);

cv::namedWindow(blendedWindowName);

int defaultValue = (int)(rgbWeight * 100);

cv::createTrackbar("RGB Weight %", blendedWindowName, &defaultValue, 100, updateBlendWeights);

while(true) {

std::unordered_map<std::string, std::shared_ptr<dai::ImgFrame>> latestPacket;

auto queueEvents = device.getQueueEvents(queueNames);

for(const auto& name : queueEvents) {

auto packets = device.getOutputQueue(name)->tryGetAll<dai::ImgFrame>();

auto count = packets.size();

if(count > 0) {

latestPacket[name] = packets[count - 1];

}

}

for(const auto& name : queueNames) {

if(latestPacket.find(name) != latestPacket.end()) {

if(name == depthWindowName) {

frame[name] = latestPacket[name]->getFrame();

auto maxDisparity = stereo->initialConfig.getMaxDisparity();

// Optional, extend range 0..95 -> 0..255, for a better visualisation

if(1) frame[name].convertTo(frame[name], CV_8UC1, 255. / maxDisparity);

// Optional, apply false colorization

if(1) cv::applyColorMap(frame[name], frame[name], cv::COLORMAP_HOT);

} else {

frame[name] = latestPacket[name]->getCvFrame();

}

cv::imshow(name, frame[name]);

}

}

// Blend when both received

if(frame.find(rgbWindowName) != frame.end() && frame.find(depthWindowName) != frame.end()) {

// Need to have both frames in BGR format before blending

if(frame[depthWindowName].channels() < 3) {

cv::cvtColor(frame[depthWindowName], frame[depthWindowName], cv::COLOR_GRAY2BGR);

}

cv::Mat blended;

cv::addWeighted(frame[rgbWindowName], rgbWeight, frame[depthWindowName], depthWeight, 0, blended);

cv::imshow(blendedWindowName, blended);

frame.clear();

}

int key = cv::waitKey(1);

if(key == 'q' || key == 'Q') {

return 0;

}

}

return 0;

}

|