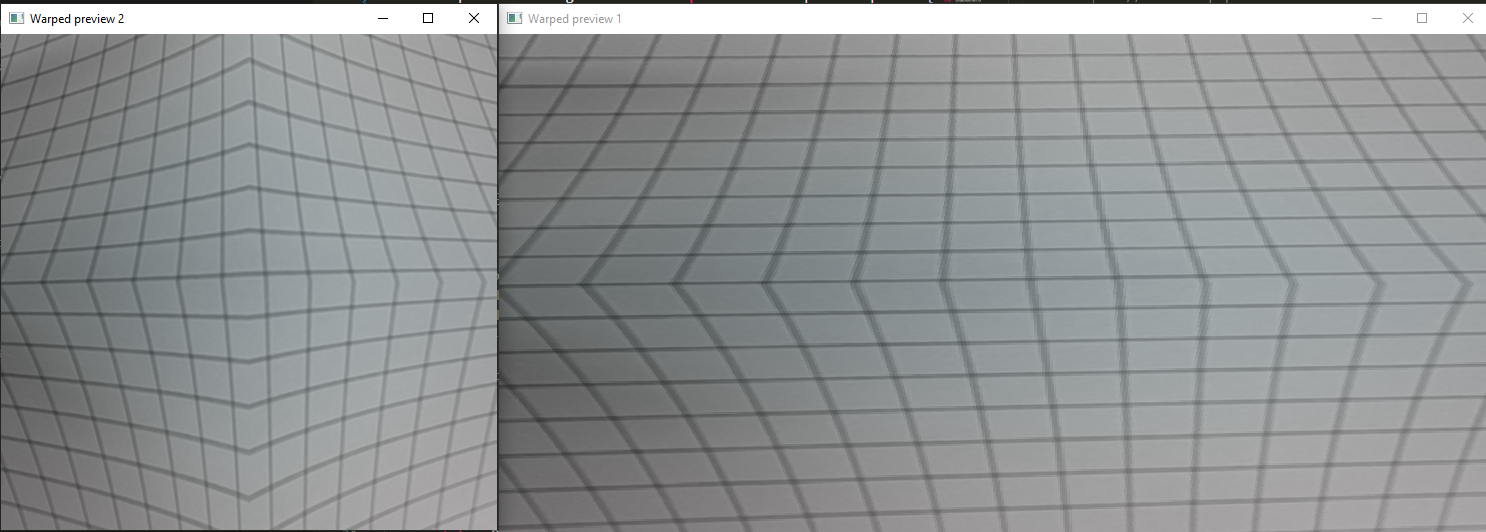

Warp Mesh

This example shows how to use the Warp node to warp the input image frame.

Setup

Please run the install script to download all required dependencies. Note that this script must be run from within a git context, so you have to clone the depthai-python repository first and then run the script:

git clone https://github.com/luxonis/depthai-python.git

cd depthai-python/examples

python3 install_requirements.py

For additional information, please follow the installation guide.
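After the install script finishes, a quick sanity check can confirm that the modules the Python example imports are present. The snippet below is a minimal sketch using only the standard library; the helper name `missing_modules` is hypothetical, and the module list is simply taken from the example's import lines (`cv2`, `depthai`, `numpy`).

```python
import importlib.util

# Module names taken from the import lines of the Python example on this page.
REQUIRED_MODULES = ["cv2", "depthai", "numpy"]


def missing_modules(names):
    """Return the top-level module names that cannot be found (hypothetical helper)."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules were found.")
```

This only checks that the modules can be located; it does not verify versions or that a device is connected.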

Source code

Also available on GitHub

#!/usr/bin/env python3

import cv2

import depthai as dai

import numpy as np

# Create pipeline

pipeline = dai.Pipeline()

camRgb = pipeline.create(dai.node.ColorCamera)

camRgb.setPreviewSize(496, 496)

camRgb.setInterleaved(False)

maxFrameSize = camRgb.getPreviewWidth() * camRgb.getPreviewHeight() * 3

# Warp preview frame 1

warp1 = pipeline.create(dai.node.Warp)

# Create a custom warp mesh

tl = dai.Point2f(20, 20)

tr = dai.Point2f(460, 20)

ml = dai.Point2f(100, 250)

mr = dai.Point2f(400, 250)

bl = dai.Point2f(20, 460)

br = dai.Point2f(460, 460)

warp1.setWarpMesh([tl,tr,ml,mr,bl,br], 2, 3)

WARP1_OUTPUT_FRAME_SIZE = (992,500)

warp1.setOutputSize(WARP1_OUTPUT_FRAME_SIZE)

warp1.setMaxOutputFrameSize(WARP1_OUTPUT_FRAME_SIZE[0] * WARP1_OUTPUT_FRAME_SIZE[1] * 3)

warp1.setHwIds([1])

warp1.setInterpolation(dai.Interpolation.NEAREST_NEIGHBOR)

camRgb.preview.link(warp1.inputImage)

xout1 = pipeline.create(dai.node.XLinkOut)

xout1.setStreamName('out1')

warp1.out.link(xout1.input)

# Warp preview frame 2

warp2 = pipeline.create(dai.node.Warp)

# Create a custom warp mesh

mesh2 = [

    (20, 20), (250, 100), (460, 20),

    (100, 250), (250, 250), (400, 250),

    (20, 480), (250, 400), (460, 480)

]

warp2.setWarpMesh(mesh2, 3, 3)

warp2.setMaxOutputFrameSize(maxFrameSize)

warp2.setHwIds([2])

warp2.setInterpolation(dai.Interpolation.BICUBIC)

camRgb.preview.link(warp2.inputImage)

xout2 = pipeline.create(dai.node.XLinkOut)

xout2.setStreamName('out2')

warp2.out.link(xout2.input)

# Connect to device and start pipeline

with dai.Device(pipeline) as device:

    # Output queue will be used to get the rgb frames from the output defined above

    q1 = device.getOutputQueue(name="out1", maxSize=8, blocking=False)

    q2 = device.getOutputQueue(name="out2", maxSize=8, blocking=False)

    while True:

        in1 = q1.get()

        if in1 is not None:

            cv2.imshow("Warped preview 1", in1.getCvFrame())

        in2 = q2.get()

        if in2 is not None:

            cv2.imshow("Warped preview 2", in2.getCvFrame())

        if cv2.waitKey(1) == ord('q'):

            break

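The mesh passed to `setWarpMesh` in the example is a flat list of points followed by the mesh width and height. Judging from the first mesh (`[tl, tr, ml, mr, bl, br]` with width 2 and height 3), the points appear to be listed row by row, left to right and top to bottom. The sketch below builds such a list under that assumption; `make_grid_mesh` and `move_point` are hypothetical helpers written for illustration and are not part of the depthai API.

```python
def make_grid_mesh(frame_w, frame_h, mesh_w, mesh_h):
    """Return mesh_w * mesh_h evenly spaced (x, y) points in row-major order.

    Assumption: row-major ordering, inferred from the example's
    [tl, tr, ml, mr, bl, br] list used with width 2 and height 3.
    """
    if mesh_w < 2 or mesh_h < 2:
        raise ValueError("mesh needs at least 2 points per side")
    points = []
    for row in range(mesh_h):
        y = row * (frame_h - 1) / (mesh_h - 1)
        for col in range(mesh_w):
            x = col * (frame_w - 1) / (mesh_w - 1)
            points.append((x, y))
    return points


def move_point(mesh, mesh_w, col, row, new_xy):
    """Return a copy of the mesh with the point at (col, row) replaced."""
    moved = list(mesh)
    moved[row * mesh_w + col] = new_xy
    return moved


if __name__ == "__main__":
    # A regular 3x3 grid over a 496x496 frame, then shift the top-middle point.
    mesh = make_grid_mesh(496, 496, 3, 3)
    mesh = move_point(mesh, 3, col=1, row=0, new_xy=(250, 100))
    print(len(mesh), mesh[1])
```

The resulting list of tuples has the same shape as `mesh2` in the example, so it could be passed the same way, for instance `warp2.setWarpMesh(mesh, 3, 3)`.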

Also available on GitHub

#include <iostream>

// Includes common necessary includes for development using depthai library

#include "depthai/depthai.hpp"

int main() {

    using namespace std;

    // Create pipeline

    dai::Pipeline pipeline;

    auto camRgb = pipeline.create<dai::node::ColorCamera>();

    camRgb->setPreviewSize(496, 496);

    camRgb->setInterleaved(false);

    auto maxFrameSize = camRgb->getPreviewWidth() * camRgb->getPreviewHeight() * 3;

    // Warp preview frame 1

    auto warp1 = pipeline.create<dai::node::Warp>();

    // Create a custom warp mesh

    dai::Point2f tl(20, 20);

    dai::Point2f tr(460, 20);

    dai::Point2f ml(100, 250);

    dai::Point2f mr(400, 250);

    dai::Point2f bl(20, 460);

    dai::Point2f br(460, 460);

    warp1->setWarpMesh({tl, tr, ml, mr, bl, br}, 2, 3);

    constexpr std::tuple<int, int> WARP1_OUTPUT_FRAME_SIZE = {992, 500};

    warp1->setOutputSize(WARP1_OUTPUT_FRAME_SIZE);

    warp1->setMaxOutputFrameSize(std::get<0>(WARP1_OUTPUT_FRAME_SIZE) * std::get<1>(WARP1_OUTPUT_FRAME_SIZE) * 3);

    warp1->setInterpolation(dai::Interpolation::NEAREST_NEIGHBOR);

    warp1->setHwIds({1});

    camRgb->preview.link(warp1->inputImage);

    auto xout1 = pipeline.create<dai::node::XLinkOut>();

    xout1->setStreamName("out1");

    warp1->out.link(xout1->input);

    // Warp preview frame 2

    auto warp2 = pipeline.create<dai::node::Warp>();

    // Create a custom warp mesh

    // clang-format off

    std::vector<dai::Point2f> mesh2 = {

        {20, 20}, {250, 100}, {460, 20},

        {100, 250}, {250, 250}, {400, 250},

        {20, 480}, {250, 400}, {460, 480}

    };

    // clang-format on

    warp2->setWarpMesh(mesh2, 3, 3);

    warp2->setMaxOutputFrameSize(maxFrameSize);

    warp2->setInterpolation(dai::Interpolation::BICUBIC);

    warp2->setHwIds({2});

    camRgb->preview.link(warp2->inputImage);

    auto xout2 = pipeline.create<dai::node::XLinkOut>();

    xout2->setStreamName("out2");

    warp2->out.link(xout2->input);

    dai::Device device(pipeline);

    auto q1 = device.getOutputQueue("out1", 8, false);

    auto q2 = device.getOutputQueue("out2", 8, false);

    while(true) {

        auto in1 = q1->get<dai::ImgFrame>();

        if(in1) {

            cv::imshow("Warped preview 1", in1->getCvFrame());

        }

        auto in2 = q2->get<dai::ImgFrame>();

        if(in2) {

            cv::imshow("Warped preview 2", in2->getCvFrame());

        }

        int key = cv::waitKey(1);

        if(key == 'q' || key == 'Q') return 0;

    }

    return 0;

}

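Both versions of the example size the warp output buffers as width times height times 3. The short sketch below works through that arithmetic for the two frame sizes used here. It assumes the factor 3 stands for three one-byte color channels, which the example implies but does not state; `frame_bytes` is a hypothetical helper.

```python
def frame_bytes(width, height, channels=3):
    """Bytes for one frame, assuming one byte per channel (an assumption)."""
    return width * height * channels


# Preview frame used by warp2 (496 x 496), matching maxFrameSize in the example.
print(frame_bytes(496, 496))   # 738048

# Output frame configured for warp1 (992 x 500).
print(frame_bytes(992, 500))   # 1488000
```

Under this reading, warp1 needs roughly twice the buffer of warp2 because its output frame is larger than the 496 x 496 preview.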