Multiple devices¶

For many applications it’s useful to have multiple OAK cameras running at the same time, as more cameras can perceive more (of the world around them). Examples here would include:

Box measurement app, multiple cameras from multiple different perspectives could provide better dimension estimation

People counter/tracker app, multiple cameras could count/track people across a large area (eg. shopping mall)

Attaching multiple cameras on front/back/left/right side of your robot for full 360° vision, so your robot can perceive the whole area around it regardless of how it’s positioned.

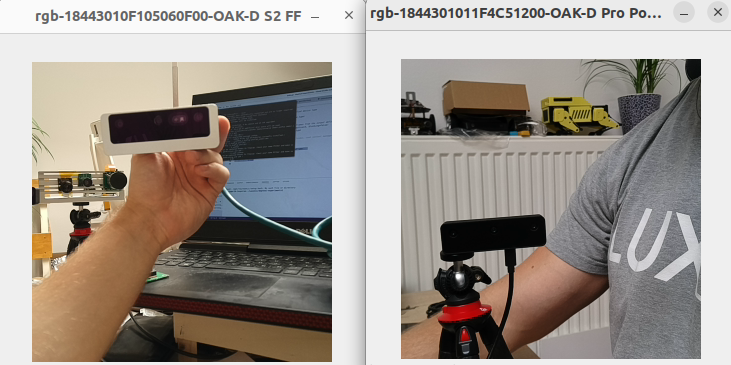

This example shows how you can use multiple OAK cameras on a single host. The demo will find all devices connected to the host computer and display an RGB preview from each of the camera.

An demo application that does object detection from multiple cameras can be found here.

Demo¶

Two OAK cameras looking at each other.¶

===Connected to 18443010F105060F00

>>> MXID: 18443010F105060F00

>>> Num of cameras: 3

>>> USB speed: UsbSpeed.SUPER

>>> Board name: DM9098

>>> Product name: OAK-D S2 FF

===Connected to 1844301011F4C51200

>>> MXID: 1844301011F4C51200

>>> Num of cameras: 3

>>> USB speed: UsbSpeed.UNKNOWN

>>> Board name: NG9097

>>> Product name: OAK-D Pro PoE AF

Setup¶

Please run the install script to download all required dependencies. Please note that this script must be ran from git context, so you have to download the depthai-python repository first and then run the script

git clone https://github.com/luxonis/depthai-python.git

cd depthai-python/examples

python3 install_requirements.py

For additional information, please follow installation guide

This example script requires external file(s) to run. If you are using:

depthai-python, run

python3 examples/install_requirements.pyto download required file(s)dephtai-core, required file(s) will get downloaded automatically when building the example

Source code¶

Also available on GitHub

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 | #!/usr/bin/env python3

import cv2

import depthai as dai

import contextlib

def createPipeline():

# Start defining a pipeline

pipeline = dai.Pipeline()

# Define a source - color camera

camRgb = pipeline.create(dai.node.ColorCamera)

camRgb.setPreviewSize(300, 300)

camRgb.setBoardSocket(dai.CameraBoardSocket.CAM_A)

camRgb.setResolution(dai.ColorCameraProperties.SensorResolution.THE_1080_P)

camRgb.setInterleaved(False)

# Create output

xoutRgb = pipeline.create(dai.node.XLinkOut)

xoutRgb.setStreamName("rgb")

camRgb.preview.link(xoutRgb.input)

return pipeline

with contextlib.ExitStack() as stack:

deviceInfos = dai.Device.getAllAvailableDevices()

usbSpeed = dai.UsbSpeed.SUPER

openVinoVersion = dai.OpenVINO.Version.VERSION_2021_4

qRgbMap = []

devices = []

for deviceInfo in deviceInfos:

deviceInfo: dai.DeviceInfo

device: dai.Device = stack.enter_context(dai.Device(openVinoVersion, deviceInfo, usbSpeed))

devices.append(device)

print("===Connected to ", deviceInfo.getMxId())

mxId = device.getMxId()

cameras = device.getConnectedCameras()

usbSpeed = device.getUsbSpeed()

eepromData = device.readCalibration2().getEepromData()

print(" >>> MXID:", mxId)

print(" >>> Num of cameras:", len(cameras))

print(" >>> USB speed:", usbSpeed)

if eepromData.boardName != "":

print(" >>> Board name:", eepromData.boardName)

if eepromData.productName != "":

print(" >>> Product name:", eepromData.productName)

pipeline = createPipeline()

device.startPipeline(pipeline)

# Output queue will be used to get the rgb frames from the output defined above

q_rgb = device.getOutputQueue(name="rgb", maxSize=4, blocking=False)

stream_name = "rgb-" + mxId + "-" + eepromData.productName

qRgbMap.append((q_rgb, stream_name))

while True:

for q_rgb, stream_name in qRgbMap:

if q_rgb.has():

cv2.imshow(stream_name, q_rgb.get().getCvFrame())

if cv2.waitKey(1) == ord('q'):

break

|

Also available on GitHub

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 | #include <chrono>

#include <iostream>

// Includes common necessary includes for development using depthai library

#include "depthai/depthai.hpp"

std::shared_ptr<dai::Pipeline> createPipeline() {

// Start defining a pipeline

auto pipeline = std::make_shared<dai::Pipeline>();

// Define a source - color camera

auto camRgb = pipeline->create<dai::node::ColorCamera>();

camRgb->setPreviewSize(300, 300);

camRgb->setBoardSocket(dai::CameraBoardSocket::CAM_A);

camRgb->setResolution(dai::ColorCameraProperties::SensorResolution::THE_1080_P);

camRgb->setInterleaved(false);

// Create output

auto xoutRgb = pipeline->create<dai::node::XLinkOut>();

xoutRgb->setStreamName("rgb");

camRgb->preview.link(xoutRgb->input);

return pipeline;

}

int main(int argc, char** argv) {

auto deviceInfoVec = dai::Device::getAllAvailableDevices();

const auto usbSpeed = dai::UsbSpeed::SUPER;

auto openVinoVersion = dai::OpenVINO::Version::VERSION_2021_4;

std::map<std::string, std::shared_ptr<dai::DataOutputQueue>> qRgbMap;

std::vector<std::shared_ptr<dai::Device>> devices;

for(auto& deviceInfo : deviceInfoVec) {

auto device = std::make_shared<dai::Device>(openVinoVersion, deviceInfo, usbSpeed);

devices.push_back(device);

std::cout << "===Connected to " << deviceInfo.getMxId() << std::endl;

auto mxId = device->getMxId();

auto cameras = device->getConnectedCameras();

auto usbSpeed = device->getUsbSpeed();

auto eepromData = device->readCalibration2().getEepromData();

std::cout << " >>> MXID:" << mxId << std::endl;

std::cout << " >>> Num of cameras:" << cameras.size() << std::endl;

std::cout << " >>> USB speed:" << usbSpeed << std::endl;

if(eepromData.boardName != "") {

std::cout << " >>> Board name:" << eepromData.boardName << std::endl;

}

if(eepromData.productName != "") {

std::cout << " >>> Product name:" << eepromData.productName << std::endl;

}

auto pipeline = createPipeline();

device->startPipeline(*pipeline);

auto qRgb = device->getOutputQueue("rgb", 4, false);

std::string streamName = "rgb-" + eepromData.productName + mxId;

qRgbMap.insert({streamName, qRgb});

}

while(true) {

for(auto& element : qRgbMap) {

auto qRgb = element.second;

auto streamName = element.first;

auto inRgb = qRgb->tryGet<dai::ImgFrame>();

if(inRgb != nullptr) {

cv::imshow(streamName, inRgb->getCvFrame());

}

}

int key = cv::waitKey(1);

if(key == 'q' || key == 'Q') {

return 0;

}

}

return 0;

}

|