Script forward frames

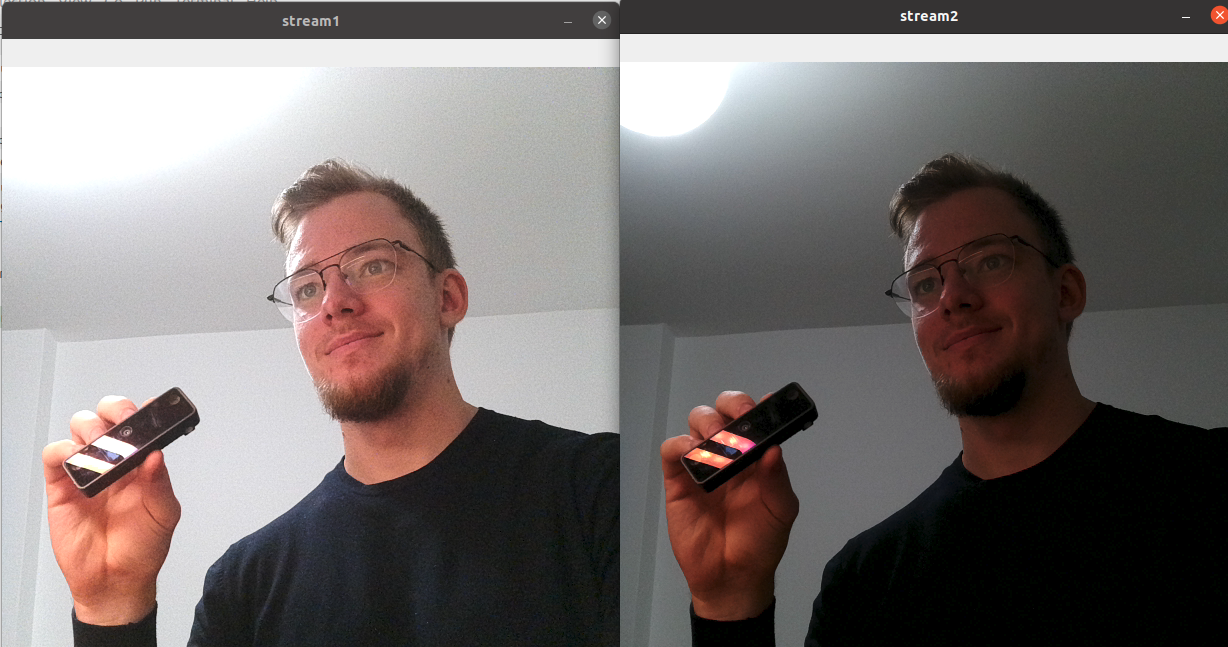

This example shows how to use the Script node to forward (demultiplex) frames to two different outputs, in this case directly to two XLinkOut nodes. The script also alternates the camera's auto-exposure compensation between +3 and -3 from one frame to the next, which results in two streams, one lighter and one darker.
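The alternation described above can be modelled without a device. The following is a minimal sketch in plain Python, not DepthAI code: `demultiplex` is a hypothetical helper, the frames are stand-in strings, and the returned compensation list only records the values the script would request, in order. It makes no claim about which captured frame each request ends up affecting.

```python
from typing import Iterable, List, Tuple


def demultiplex(frames: Iterable[str]) -> Tuple[List[str], List[str], List[int]]:
    """Route frames alternately to two outputs, toggling a flag per frame.

    Hypothetical stand-in for the on-device script: returns the frames sent
    to each stream and the exposure-compensation values requested, in order.
    """
    stream1: List[str] = []
    stream2: List[str] = []
    compensations: List[int] = []
    normal = True
    for frame in frames:
        if normal:
            compensations.append(3)   # request brighter exposure
            stream1.append(frame)
        else:
            compensations.append(-3)  # request darker exposure
            stream2.append(frame)
        normal = not normal           # flip for the next frame
    return stream1, stream2, compensations
```

Calling `demultiplex(["f0", "f1", "f2", "f3"])` sends `f0` and `f2` to the first output and `f1` and `f3` to the second, which mirrors the `normal` flag in the example script.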

Demo

Setup

Please run the install script to download all required dependencies. Note that this script must be run from within the git repository, so you have to clone the depthai-python repository first and then run the script:

    git clone https://github.com/luxonis/depthai-python.git
    cd depthai-python/examples
    python3 install_requirements.py

For additional information, please follow the installation guide.
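After the install script finishes, it can be handy to confirm that the modules imported by the example are visible to the interpreter. The following is a small sketch using only the standard library; `missing_modules` is a hypothetical helper and not part of DepthAI or the install script.

```python
import importlib.util
from typing import Iterable, List


def missing_modules(names: Iterable[str]) -> List[str]:
    """Return the top-level module names that cannot be found.

    Uses importlib.util.find_spec, which locates a module without
    importing it and returns None when nothing is found.
    """
    return [name for name in names if importlib.util.find_spec(name) is None]
```

For this example, `missing_modules(["cv2", "depthai"])` should return an empty list once the dependencies are installed; any name it returns still needs to be installed.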

Source code

Also available on GitHub

    #!/usr/bin/env python3
    import cv2
    import depthai as dai

    # Start defining a pipeline
    pipeline = dai.Pipeline()

    cam = pipeline.create(dai.node.ColorCamera)
    # Not needed, you can display 1080P frames as well
    cam.setIspScale(1,2)

    # Script node
    script = pipeline.create(dai.node.Script)
    script.setScript("""
        ctrl = CameraControl()
        ctrl.setCaptureStill(True)
        # Initially send still event
        node.io['ctrl'].send(ctrl)

        normal = True
        while True:
            frame = node.io['frames'].get()
            if normal:
                ctrl.setAutoExposureCompensation(3)
                node.io['stream1'].send(frame)
                normal = False
            else:
                ctrl.setAutoExposureCompensation(-3)
                node.io['stream2'].send(frame)
                normal = True
            node.io['ctrl'].send(ctrl)
    """)
    cam.still.link(script.inputs['frames'])

    # XLinkOut
    xout1 = pipeline.create(dai.node.XLinkOut)
    xout1.setStreamName('stream1')
    script.outputs['stream1'].link(xout1.input)

    xout2 = pipeline.create(dai.node.XLinkOut)
    xout2.setStreamName('stream2')
    script.outputs['stream2'].link(xout2.input)

    script.outputs['ctrl'].link(cam.inputControl)

    # Connect to device with pipeline
    with dai.Device(pipeline) as device:
        qStream1 = device.getOutputQueue("stream1")
        qStream2 = device.getOutputQueue("stream2")
        while True:
            cv2.imshow('stream1', qStream1.get().getCvFrame())
            cv2.imshow('stream2', qStream2.get().getCvFrame())
            if cv2.waitKey(1) == ord('q'):
                break
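The host loop in the listing reads one frame from `stream1` and then one from `stream2`, both with blocking `get()` calls. Because the script sends frames to the two outputs in strict alternation, those reads line up into pairs. The sketch below models that behaviour with standard-library queues and a thread; `run_model` and the frame labels are hypothetical, and no DepthAI API is involved.

```python
import queue
import threading
from typing import List, Tuple


def run_model(num_frames: int) -> List[Tuple[str, str]]:
    """Alternate frames across two queues and read them back in pairs."""
    q1: queue.Queue = queue.Queue()
    q2: queue.Queue = queue.Queue()

    def script_node() -> None:
        # Stand-in for the on-device script: even frames go to the
        # first queue, odd frames to the second.
        for i in range(num_frames):
            target = q1 if i % 2 == 0 else q2
            target.put(f"frame{i}")

    worker = threading.Thread(target=script_node)
    worker.start()

    pairs: List[Tuple[str, str]] = []
    for _ in range(num_frames // 2):
        # Blocking reads in the same order as the host loop.
        first = q1.get()
        second = q2.get()
        pairs.append((first, second))

    worker.join()
    return pairs
```

With six frames the model yields three pairs, each holding one frame from each queue. If the producer stopped alternating, the second blocking read would stall, which is one reason to consider non-blocking reads in a real application.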

Also available on GitHub

    #include <iostream>

    // Includes common necessary includes for development using depthai library
    #include "depthai/depthai.hpp"

    int main() {
        using namespace std;

        // Start defining a pipeline
        dai::Pipeline pipeline;

        // Define a source - color camera
        auto cam = pipeline.create<dai::node::ColorCamera>();
        // Not needed, you can display 1080P frames as well
        cam->setIspScale(1, 2);

        // Script node
        auto script = pipeline.create<dai::node::Script>();
        script->setScript(R"(
            ctrl = CameraControl()
            ctrl.setCaptureStill(True)
            # Initially send still event
            node.io['ctrl'].send(ctrl)

            normal = True
            while True:
                frame = node.io['frames'].get()
                if normal:
                    ctrl.setAutoExposureCompensation(3)
                    node.io['stream1'].send(frame)
                    normal = False
                else:
                    ctrl.setAutoExposureCompensation(-3)
                    node.io['stream2'].send(frame)
                    normal = True
                node.io['ctrl'].send(ctrl)
        )");
        cam->still.link(script->inputs["frames"]);

        // XLinkOut
        auto xout1 = pipeline.create<dai::node::XLinkOut>();
        xout1->setStreamName("stream1");
        script->outputs["stream1"].link(xout1->input);

        auto xout2 = pipeline.create<dai::node::XLinkOut>();
        xout2->setStreamName("stream2");
        script->outputs["stream2"].link(xout2->input);

        // Connections
        script->outputs["ctrl"].link(cam->inputControl);

        // Connect to device with pipeline
        dai::Device device(pipeline);
        auto qStream1 = device.getOutputQueue("stream1");
        auto qStream2 = device.getOutputQueue("stream2");
        while(true) {
            cv::imshow("stream1", qStream1->get<dai::ImgFrame>()->getCvFrame());
            cv::imshow("stream2", qStream2->get<dai::ImgFrame>()->getCvFrame());
            if(cv::waitKey(1) == 'q') {
                break;
            }
        }
    }